I am a Physicist, Researcher, Senior Artificial Intelligence/Machine Learning Research Engineer. Currently, I am working on my research projects (see Interests) and collaborating on Machine Learning related projects.

I did my undergraduate and master's degrees in Physics at the Budapest University of Technology and Economics. My undergraduate supervisor was Dénes Petz and my topic was related to Quantum Information Theory and Quantum Metrology.

During my master's in Theoretical Physics, I worked on low-dimensional systems. I briefly investigated critical slowing down in Random cluster models with Gábor Pete. For my thesis, I first worked on Luttinger liquids from a Condensed Matter Physics point of view (with Gergely Zaránd and Balázs Dóra), then on Integrable Quantum Field Theories with Zoltán Bajnok.

I gained valuable work experience and participated in various projects while being a consultant at Wolfram Research, researcher at Heloro s.r.o. energy company, module leader and mentor at Milestone Institute, and most recently AI/ML research engineer at Imagination Technologies. (See more details in Projects and Teaching.)

When I'm not doing work, research or teaching, I ride my waveboard, cook some vegetarian or pescatarian dish or appreciate nature while hiking, cycling or gardening. If I have a chance to go underwater, I do free- or scuba diving.

See my latest CV for a more detailed research/educational background and work experience.

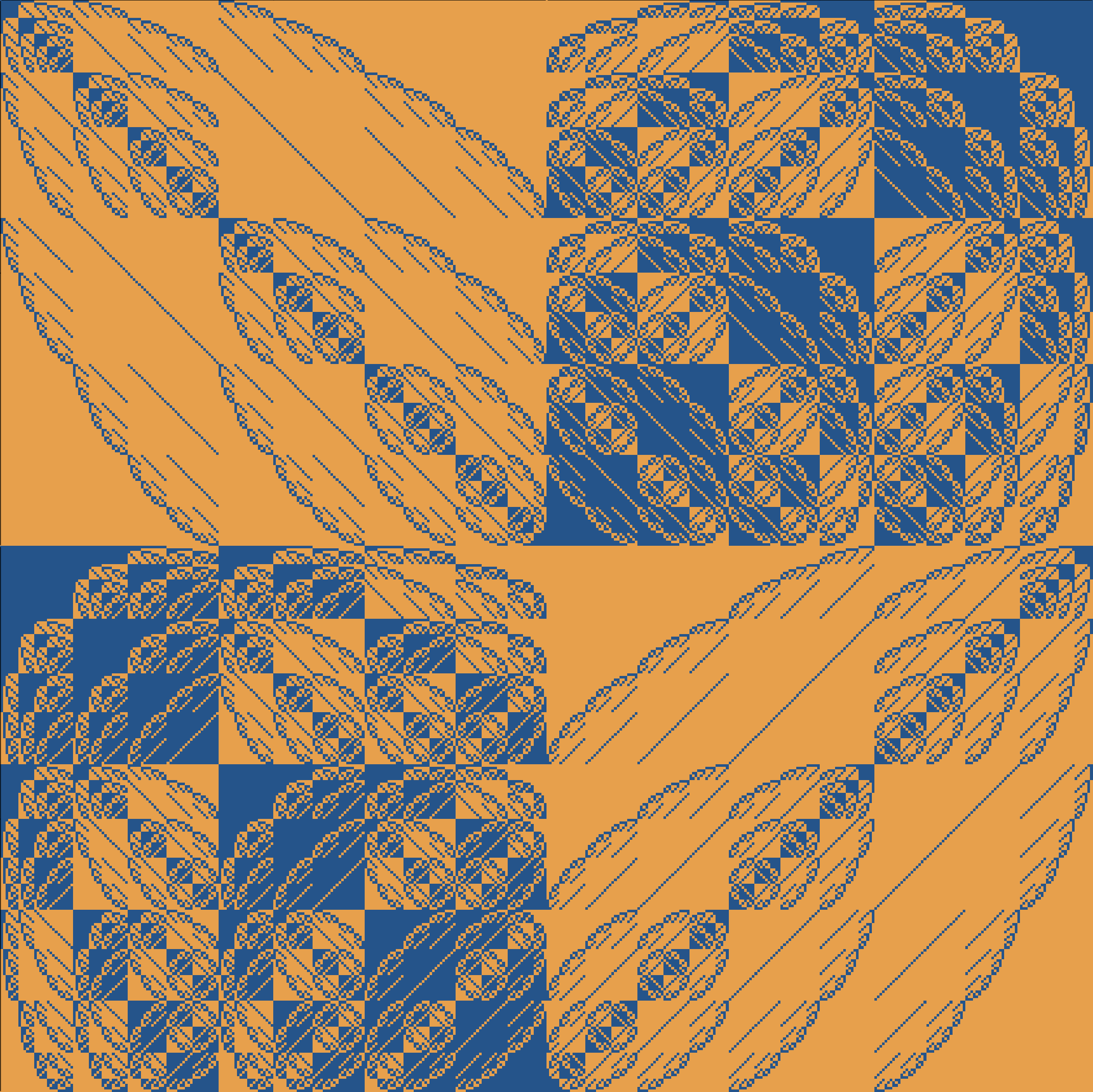

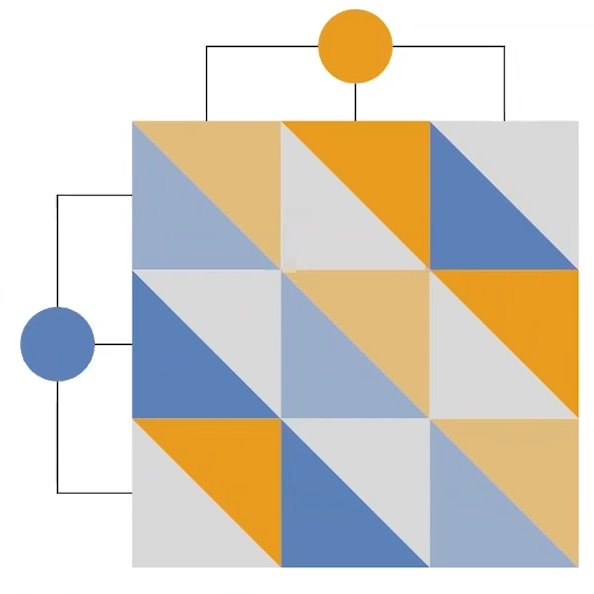

The investigation and exploration of Statistical Games has been motivated by another interest: the Interpretation of Probability. Although the project can be viewed as the simplest nontrivial example of a general framework constructed for formalizing uncertainty, the results for guessing and betting games are interesting in their own right.

A manuscript has been uploaded to arXiv containing some concrete mathematical results. Hopefully, the introduced concepts and the investigated toy model will serve as a broad enough platform to support more useful and more general statistical tools mentioned in the section "Future work and extension".

See the manuscript on arXiv:2402.15892.

See the project's GitHub repository for further resources.

For more details see the Projects section.

"Probability is the very guide of life", however, there are many ways to interpret the concept, and its guidance.

The standard Bayesian interpretation suffers from the lack of justification for prior choices. There have been many attempts to find appropriate principles for Objective Bayesianism (Probability Theory by E. T. Jaynes, Bayesian Theory by José M. Bernardo, Adrian F. M. Smith), besides the construction of a Subjective Bayesian alternative pioneered by Savage and de Finetti.

Von Neumann's minimax theorem and the framework of game theory served as another possible justification of inference methods. The 1950 book by Abraham Wald defines inference as an optimal strategy followed by "Experimenter" who plays a zero-sum game against "Nature". In this early work, only a quadratic loss function was analysed; however, independently, R. L. Kashyap investigated zero-sum games with a relative entropy loss function in the 1970s (see Kashyap1971, Kashyap1974).

Bayesian methods are becoming increasingly popular in Machine Learning and engineering applications due to sufficient computational power. However, the choice of prior is often not well justified, which can cause problems mainly in data-scarce situations. A loss function based approach can increase accuracy on small data sets. It would also improve the accuracy of prior dominated models, which are typically the ones having many parameters.

See my Research Proposal on the topic.

Related topics:

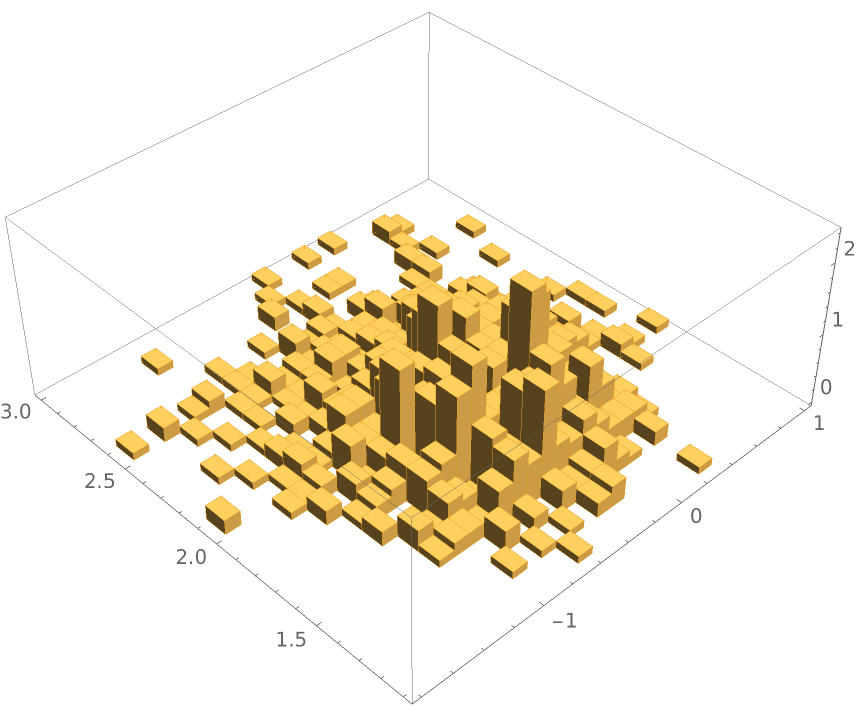

Verified computation (also known as Validated numerics) is a collection of methods by which one can give exact error bounds after finite computation.

I am mostly interested in exact error bounds of the spectrum of (potentially infinite dimensional) operators, which is a common problem in Quantum Mechanics and Quantum Field Theory.

(The figure on the right shows the result of the calculation of the first 3 energy levels of an anharmonic quantum oscillator, as a function of the coefficient of the quartic term. Energy eigenstates were determined by first-order renormalized perturbation theory, while the intervals for the spectrum were calculated nonperturbatively by giving an upper bound for the ground state of the operator (H-E)² using the eigenstate estimation.)
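The enclosure idea in the figure description can be illustrated on a toy problem. For a symmetric (self-adjoint) operator H, a trial vector ψ and a real number E, at least one eigenvalue λ satisfies (λ - E)² ≤ ⟨ψ|(H-E)²|ψ⟩ / ⟨ψ|ψ⟩. The following is only a minimal sketch under stated assumptions: a 2×2 matrix stands in for the operator, exact rational arithmetic stands in for interval arithmetic, and the function names are hypothetical. It is not the code used to produce the figure.

```python
from fractions import Fraction


def matvec(A, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * x for a, x in zip(row, v)) for row in A]


def dot(u, v):
    """Euclidean inner product of two real vectors."""
    return sum(a * b for a, b in zip(u, v))


def eigenvalue_enclosure(H, psi):
    """Return (E, eps2) for a symmetric matrix H and trial vector psi.

    E is the Rayleigh quotient of psi, and eps2 = <psi|(H-E)^2|psi>/<psi|psi>.
    Some eigenvalue lam of H then satisfies (lam - E)**2 <= eps2.
    With Fraction inputs every step is exact, so the bound is rigorous.
    """
    norm2 = dot(psi, psi)
    H_psi = matvec(H, psi)
    E = dot(psi, H_psi) / norm2
    residual = [h - E * p for h, p in zip(H_psi, psi)]
    eps2 = dot(residual, residual) / norm2
    return E, eps2


# Toy operator with known eigenvalues 1 and 3 (illustrative choice).
H = [[Fraction(2), Fraction(1)], [Fraction(1), Fraction(2)]]
# Approximate eigenvector for eigenvalue 3 (the exact one is (1, 1)).
psi = [Fraction(1), Fraction(9, 10)]

E, eps2 = eigenvalue_enclosure(H, psi)
print(f"E = {E}, squared radius = {eps2}")
```

A better eigenstate estimate shrinks the residual and therefore the enclosing interval; for infinite-dimensional operators the same inequality holds, but evaluating the right-hand side rigorously requires controlled rounding.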

An incomplete list of techniques:

A Theory of Everything is probably the Holy Grail of Theoretical Physics. As a PhD student, I wanted to push my limits and understand the most fundamental and most advanced theories about our Universe. During my education I got familiar with the Standard Model of particle physics and General Relativity, and got an introduction to String Theory. My work was related to the AdS/CFT correspondence (more as a mathematical technique than a view of the real world).

Even as someone who used mostly the formalism of String Theory, I don't think that only this framework (e.g. M-theory) can function as a possible Fundamental Theory. I consider myself an "agnostic theoretical physicist", meaning that in my view, by physical theories, we can gain compact and/or predictive descriptions of the world, but not necessarily by finding the ultimate building blocks and universal governing laws of the Universe. Modelling, in general, is valuable because it can help us navigate, manipulate and understand the world. For modelling, different frameworks have been and are being used: human language, mathematics, computation... This signals that our theories always blend our own thinking with real-world phenomena.

In this light, fundamental theories might appear in many forms. Different forms can even have different advantages. For example:

a gas can be modelled by colliding particles, – or by flowing currents and correlated densities;

classical mechanics can be formulated by Newton's equations, – or by the principle of least action;

quantum-mechanical systems can be described by Schrödinger's wave function, – or by Feynman's path integral formulation;

(see more advanced dualities in theoretical physics).

In my view, the main function of theoretical physics is to generate different theories or frameworks and calculate their predictions. Further, it might estimate the (computational and/or algorithmic) complexity of the theories and explore the connections between different theoretical models.

This generative process can stay playful only if it is a part of a broader scientific process. Physics as a discipline needs to contrast Theories with Experiments, which can filter out, or at least favour or disfavour, theoretical models. An incomplete list of respectable experimental results includes: the Particle Data Group, Bell experiment(s), the measurements of the Cosmic microwave background by the Planck telescope, gravitational wave data from LIGO, the redshift survey by the Sloan Digital Sky Survey, Galaxy rotation curve measurements, and data from Gamma Ray Bursts.

An incomplete list of fundamental theory suggestions:

Of course, there might be flaws in a theory or a model, even before it is contrasted with experiments. A theory can be seriously inconsistent, misguided or overrated, there can be sociological factors, etc. However, in my view, even a flawed theory can serve as an inspiration or might be useful in a different area.

Personally, I am mostly interested in the connections between different frameworks, and I appreciate seeing similar or the same constructions from different viewpoints.

A brief summary of my own thoughts:

See further details and more statistics on INSPIRE-HEP, Semantic Scholar or Google Scholar.

The following functions are part of the Wolfram Function Repository and are written in Wolfram Language.

Generate a sequence of values using the Metropolis–Hastings Markov chain Monte Carlo method. (See further references in section: Source Metadata.)

The function can be used in Mathematica by calling: ResourceFunction["MetropolisHastingsSequence"]

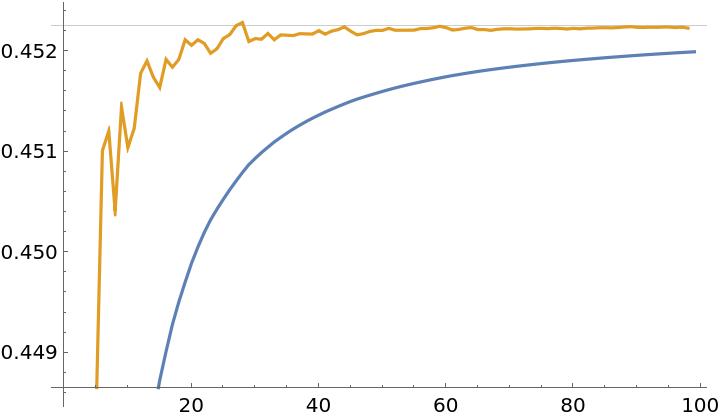
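For readers who prefer to see the idea outside Mathematica, here is a minimal Python sketch of the sampling scheme. It assumes a symmetric Gaussian random-walk proposal (the Metropolis special case of Metropolis–Hastings) and a standard normal target; the function name, parameters and defaults are hypothetical and do not mirror the signature or internals of the Wolfram resource function.

```python
import math
import random


def metropolis_hastings_sequence(log_density, x0, n, step=1.0, seed=0):
    """Generate n correlated samples from exp(log_density).

    Uses a symmetric Gaussian random-walk proposal, so the Hastings
    correction factor equals 1 and only the density ratio enters.
    """
    rng = random.Random(seed)
    x, logp = x0, log_density(x0)
    samples = []
    for _ in range(n):
        proposal = x + rng.gauss(0.0, step)
        logp_new = log_density(proposal)
        # Accept with probability min(1, p(proposal) / p(x)).
        # 1 - rng.random() lies in (0, 1], so the logarithm is defined.
        if math.log(1.0 - rng.random()) < logp_new - logp:
            x, logp = proposal, logp_new
        samples.append(x)  # a rejected move repeats the current state
    return samples


# Illustrative target: standard normal, up to an additive constant.
samples = metropolis_hastings_sequence(lambda x: -0.5 * x * x, x0=0.0, n=20000)
mean = sum(samples) / len(samples)
var = sum((s - mean) ** 2 for s in samples) / len(samples)
print(f"sample mean ~ {mean:.3f}, sample variance ~ {var:.3f}")
```

Working with log-densities avoids underflow in the tails; for an asymmetric proposal, the acceptance test would also need the ratio of proposal densities.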

Calculate an accelerated version of a symbolic sequence using Richardson extrapolation. (See further references in section: Source Metadata.)

The function can be used in Mathematica by calling: ResourceFunction["RichardsonExtrapolate"]
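The acceleration idea can also be sketched numerically in Python. This is only an illustration under stated assumptions: it treats a step-size-dependent approximation A(h) with leading error of order h^k, whereas the resource function operates on symbolic sequences; the names below are hypothetical.

```python
import math


def richardson_extrapolate(approx, h, order=1):
    """Combine approx(h) and approx(h/2) to cancel the leading error term.

    Assumes approx(h) = exact + C * h**order + higher-order terms.
    """
    factor = 2 ** order
    return (factor * approx(h / 2) - approx(h)) / (factor - 1)


def forward_difference(f, x):
    """Return h -> (f(x+h) - f(x)) / h, a first-order derivative estimate."""
    return lambda h: (f(x + h) - f(x)) / h


# Illustrative case: derivative of exp at 0, whose exact value is 1.
estimate = forward_difference(math.exp, 0.0)
plain = estimate(0.05)
accelerated = richardson_extrapolate(estimate, 0.1, order=1)
print(f"plain error: {abs(plain - 1):.2e}, accelerated error: {abs(accelerated - 1):.2e}")
```

Both estimates use the same smallest step, h/2 = 0.05, but the extrapolated value removes the O(h) term and leaves an O(h²) error.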

The module has two main ambitious goals:

i.) It aims to guide students through several model-making processes, where we take real-world problems from different fields and build approximate mathematical and/or computational models with which we predict and optimise. (An essential part of working with models is to know their domain of validity, which will be determined critically, and sometimes extended iteratively.)

ii.) In this module we will work with real-world (and sometimes generated) data, look at it from different angles, process it, and extract information by visualisation and computation. In several cases, we will go through how to draw conclusions, optimise or make predictions based on data.

The module was held in the autumn term of 2021-2022 in cooperation with Wolfram Research in the Milestone Institute.

The Data Science Course github repository contains Presentation notebooks (both in Python and Wolfram Language).

What is order? Are there materials, processes or systems that are more ordered than others? Are chaotic processes unpredictable? How did complexity emerge and what does it have to do with life? The module tries to give answers to these and related questions through the lenses of thermodynamics and modern physics.

The module was held in the autumn term of the 2020-2021 year together with Dávid Komáromy in the Milestone Institute.

Mechanics is a fundamental building block of other more complex Engineering and Physics courses. Most students encounter it very early in high school. However, there is a wide gap between mechanics in high school and university. The aim of this course is to cover the broad range of mechanics in a mathematically more formal way to bring the students closer to the level required in university.

The module was held in the spring term of the 2019-2020 year in the Milestone Institute.

The aim of this module is to give an introduction to Thermodynamics, and to related modern concepts like Universality, Complexity and Emergence.

The module was held in the spring term of the 2018-2019 year in the Milestone Institute.

The module focuses on the Physics Aptitude Test (PAT) for applicants to Engineering, Materials Science and Physics at the University of Oxford, and on the Engineering Admissions Assessment (ENGAA) for applicants to the University of Cambridge Engineering undergraduate degree course. The main goal of the module is to achieve a successful test result on the mentioned tests.

The module was held in the summer term of the 2020-2021 year and autumn term of the 2018-2019 year in the Milestone Institute.

I was a Teaching Assistant for the Virtual 2020 Wolfram Summer School. I mostly helped students taking the Physics track by answering their questions through chat and providing relevant scientific literature for their projects. (See a blog post summarizing the event.)

This talent-nurturing problem-solving seminar was designed for curious and motivated students, who were interested in extracurricular work and wanted to keep their problem-solving skills in shape. During the semester, attendees got a list of challenging and interesting physics problems that they could solve at home, and they presented their results during the seminar sessions.

The seminar was held from 2013 to 2016 together with Miklós Antal Werner and later with Sándor Kollarics and Gábor Csősz as an extracurricular activity organized by Eugene Wigner College of Advanced Studies.

See my old academic teaching page for some of the discussed problems (available in Hungarian).

This extracurricular activity was resurrected as "Feynman seminar" in 2018 by Zsolt Györgypál and continued by Dávid Szász-Schargin.

The structure of the seminar was inspired by the Pólya-Szegő Seminar and by the Hungarian Physics Olympiad Seminar.

Topics: Electromagnetism, Optics, Relativity and Quantum mechanics.

Demonstrations were held in the autumn terms of 2009 and 2012 for 3rd year BSc students of the Faculty of Electrical Engineering and Informatics (VIK) of the Budapest University of Technology and Economics (BME).

The suggested literature for the lectures was University Physics (volume II) by Alvin Hudson and Rex Nelson.

Some details are preserved on my old academic teaching page (available in Hungarian).

The class has been rated on Mark my Professor.

An independent theoretical research project related to the Interpretation of Probability. The project has so far resulted in a manuscript, which is accessible on arXiv:

Abstract: This work contains the mathematical exploration of a few prototypical games in which central concepts from statistics and probability theory naturally emerge. The first two kinds of games are termed Fisher and Bayesian games, which are connected to Frequentist and Bayesian statistics, respectively. Later, a more general type of game is introduced, termed Statistical game, in which a further parameter, the players' relative risk aversion, can be set. In this work, we show that Fisher and Bayesian games can be viewed as limiting cases of Statistical games. Therefore, Statistical games can be viewed as a unified framework, incorporating both Frequentist and Bayesian statistics. Furthermore, a philosophical framework is (re-)presented -- often referred to as minimax regret criterion -- as a general approach to decision making. The main motivation for this work was to embed Bayesian statistics into a broader decision-making framework, where, based on collected data, actions with consequences have to be made, which can be translated to utilities (or rewards/losses) of the decision-maker. The work starts with the simplest possible toy model, related to hypothesis testing and statistical inference. This choice has two main benefits: i.) it allows us to determine (conjecture) the behaviour of the equilibrium strategies in various limiting cases ii.) this way, we can introduce Statistical games without requiring additional stochastic parameters. The work contains game theoretical methods related to two-player, non-cooperative games to determine and prove equilibrium strategies of Fisher, Bayesian and Statistical games. It also relies on analytical tools for derivations concerning various limiting cases.

See the manuscript on arXiv:2402.15892.

For more context see a promo post on LinkedIn and the project's GitHub repository.

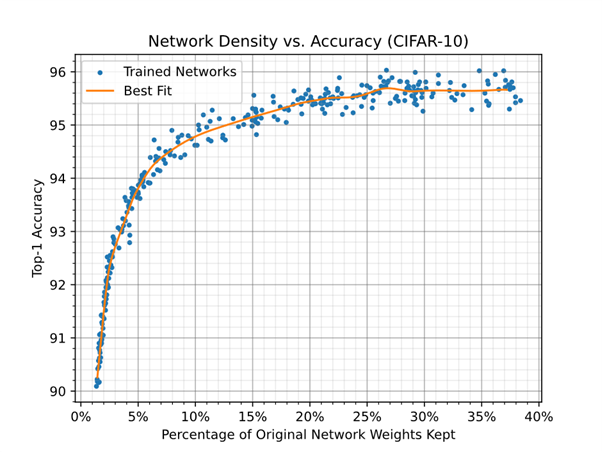

In 2023, I presented the results of a small research project titled Self-Compressed Nano GPT, prepared for the Eastern European Machine Learning Summer School (EEML):

Abstract: The proposed project is the continuation and generalization of the paper: Self-Compressing Neural Networks. The main contribution of the paper is a novel quantization-aware training (QAT) method. The approach can be used both to compress models during training, and to compress pretrained models. The experiments of the original paper were conducted on visual models (classification on CIFAR10 dataset), which I would extend and generalize to natural language processing models and tasks.

The project was carried out at Imagination Technologies together with Timothy Gale and James Imber. The results were presented at a poster session of EEML23.

See the presented poster titled Self-Compressed Nano GPT.

For more see the project's Extended Abstract and a promo post on LinkedIn.

For further context and resources see the works of Szabolcs Cséfalvay and James Imber:

The original architecture of the network was based on Andrej Karpathy's nanoGPT:

Game Theory is a broad subject, dealing with situations in which players (or agents) can influence each other's outcomes by their actions. (Or, in short, the study of strategically interdependent behaviour.) As a mathematical theory, it adopts some assumptions about the so-called rationality of the agents, and it assumes that one can associate the players' utilities with the outcomes.

After all these nontrivial assumptions and modelling problems, Game Theory aims to predict the behaviour of the players in the form of so-called strategies. Probably the most well-known equilibrium strategy concept is the Nash equilibrium, which stands for a set of strategies of the players, in which no player can gain more by individually changing their strategy.

Game Theory was originally proposed to model the economic behaviour of rational agents. Besides the introduction of influential concepts in economics and finance, it provided useful tools in other human-related fields such as sociology, politics and military strategy. The framework appeared to be applicable in evolutionary biology (also known as evolutionary game theory), information theory and control theory as well.

For me, Game Theory is especially interesting because in game theoretic reasoning, mixed strategies, i.e. the concept of probability, can naturally emerge in otherwise completely deterministic settings. In my view, this peculiar property makes it possible for the framework to give an interpretation of (subjective) probability.
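A small example shows how probabilities emerge from a deterministic payoff table. The sketch below assumes a 2×2 zero-sum game whose equilibrium is fully mixed, and uses the textbook indifference condition: each player randomizes so that the opponent gains nothing by switching. The function name is hypothetical, and Matching Pennies is chosen only for illustration.

```python
from fractions import Fraction


def mixed_equilibrium_2x2(a, b, c, d):
    """Fully mixed equilibrium of the zero-sum game [[a, b], [c, d]].

    Entries are the row player's payoffs. Returns (p, q, value), where
    p is the probability of the first row, q the probability of the
    first column, and value the row player's expected payoff.
    """
    a, b, c, d = (Fraction(x) for x in (a, b, c, d))
    denom = a - b - c + d
    if denom == 0:
        raise ValueError("degenerate game: indifference equations have no unique solution")
    p = (d - c) / denom  # makes the column player indifferent
    q = (d - b) / denom  # makes the row player indifferent
    if not (0 < p < 1 and 0 < q < 1):
        raise ValueError("no fully mixed equilibrium; look for a pure saddle point")
    value = (a * d - b * c) / denom
    return p, q, value


# Matching Pennies: the row player wins 1 on a match and loses 1 otherwise.
p, q, value = mixed_equilibrium_2x2(1, -1, -1, 1)
print(f"row plays heads with probability {p}, column with {q}, game value {value}")
```

No pure strategy is stable in this game, so the only equilibrium has both players randomizing with probability 1/2, even though the rules contain no randomness.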

The project began with two Language Design in Wolfram Language Live CEOing sessions with Stephen Wolfram:

A more developed stage was shown at the Wolfram Technology Conference 2021.

Good introductory lectures I can suggest:

Books I can recommend:

Already existing software and solvers:

This applied research project was funded by the European Regional Development Fund and realized at Heloro s.r.o. together with Botond Sánta et al.

Thermoelectric Generators (TEG) utilize the observation that in some materials, heat flow can induce an electric current (also known as the Peltier–Seebeck effect).

In commercially available TEG modules, usually a pair of n-p semiconductors are used:

which can convert heat flow to electric power with roughly 1.5-2% /(100 ℃) efficiency. This means that the efficiency increases by approximately 1.5-2% if the temperature difference between the hot and cold side of the module increases by 100 ℃. (See, for example, the Performance Calculator of the commercial provider Hi-Z.)

Waste heat is a practically unavoidable side product of all energy-converting or transferring activities. The waste heat potential in the European Union has been estimated at ~1000 TWh/year in heat or ~200 TWh/year in (electrical or other) power. Compared with the approximately 2000-3000 TWh/year gross electricity production in the EU, this signals a significant potential to increase energy efficiency.
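As a rough illustration of this rule of thumb, the following sketch assumes that efficiency scales linearly with the temperature difference over the range of interest. The function name and the 200 ℃ example are hypothetical; real modules deviate from linearity and should be checked against the manufacturer's data.

```python
def teg_efficiency_range(delta_t_celsius, low=1.5, high=2.0):
    """Estimate the (min, max) conversion efficiency in percent.

    low and high are the efficiency gains in percent per 100 degrees
    Celsius of temperature difference, taken from the rule of thumb.
    Linear scaling is an assumption of this sketch.
    """
    scale = delta_t_celsius / 100.0
    return low * scale, high * scale


# Illustrative temperature difference between hot and cold side.
eff_min, eff_max = teg_efficiency_range(200.0)
print(f"estimated efficiency: {eff_min:.1f}% to {eff_max:.1f}%")
```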

District heating is a centralized and potentially efficient way to distribute heating and hot water in cities. It is ranked #27 on Project Drawdown's 100 solutions to global warming. District heating can be combined with many other technologies, for instance, with Cogeneration, also known as combined heat and power (which itself is ranked #50 at Drawdown). Cogeneration in District heating can be realized, for example, by using gas engines coupled with an electric generator. The "waste heat" coming from the engine can be used to preheat the incoming cold water, by which the process achieves much higher efficiency. Commercially available units are provided by, for instance, TEDOM a.s.

The main goal of the project is to use TEGs to make cogeneration even more effective and utilize the heat flow from the engine to the water cooling. This could increase efficiency by roughly 5% and function as a mountable energy-recovering unit with low maintenance costs.

There are many further opportunities in combining heat and electric power production, including possibly playing a regulatory role for intermittent renewable energy sources (e.g. Solar and Wind energy).

Compatibility with Hydrogen: the heat source in district heating systems is usually fossil fuels; however, the described technology can also be applied when the energy source is, for example, Green Hydrogen. The effectiveness of using Hydrogen still needs further research, but some (for example Zero Waste Scotland) suggest that it can be an alternative to natural gas. (Commercially available cogeneration units are already compatible with fuels blended with hydrogen.)

For those who are interested in the technological and scientific details:

Results of the project:

This document is a summary based on my experience as a Mentor at the Milestone Institute, as a former high school student at a Hungarian school in Slovakia, and University student at BME and ELTE in Budapest. (I graduated as a Theoretical Physicist and then worked at Wolfram Research.)

I started a PhD at Eötvös Loránd University as a member of Holographic Quantum Field Theory Group and as an assistant research fellow at Wigner Research Centre for Physics.

During these years, I worked on calculations exploiting Integrability in some sectors of models related by the AdS/CFT duality. (See this review series on AdS/CFT Integrability.) Our work culminated in a relatively long paper, where we numerically solved the so-called Quantum Spectral Curve equations with high precision and matched the results with the perturbative calculations in the conjectured dual theory. (For those who are interested in the topic, I suggest Christian Marboe's thesis.)

During these years, I was also a visiting researcher at the Jagiellonian University in Krakow, Poland in the group of Romuald Janik and at the Tokyo Institute of Technology in Japan in the group of Katsushi Ito.

The collection of graphics and photos can be viewed on Bēhance:

Animation on my YouTube channel: